Like most query languages, XQuery has several functions that can be used to manipulate and query data. SQL Server’s implementation supports a limited subset of the XQuery specification, but there’s a lot of power in the functions provided. I hope to cover some of those functions in more detail at a later date, but for now I’d like to focus on a couple of very specific functions (sql:variable() & sql:column()). These are proprietary extensions to XQuery, and they (like the xml methods I previously discussed) provide a bridge between the SQL engine and the XQuery engine.

For example, if you wanted to find the value of the third node of a simple XML document in SQL Server, you could do the following:

DECLARE @x XML ='<alpha>a</alpha><alpha>b</alpha><alpha>c</alpha>'

SELECT @x.value('(//alpha)[3]', 'varchar(1)')

The .value() method would return the letter “c” in the form of a varchar to the SQL engine. However, if you wanted to do this dynamically, and specify which node to return based on a parameter, you would use the sql:variable() function, like so:

DECLARE @x XML ='<alpha>a</alpha><alpha>b</alpha><alpha>c</alpha>'

DECLARE @node INT = 3

SELECT @x.value('(//alpha)[sql:variable("@node")][1]', 'varchar(1)')

The sql:variable() function uses a string literal (a value surrounded by double quotes) to reference a SQL parameter (in this case, @node) and concatenates it to the XQuery string. The above query is seen as:

(//alpha)[3][1]

by the XQuery engine. In English, we are looking for the 3rd node named alpha. You may wonder about the extra positional reference (“[1]”) ; the .value() method requires that a positional reference be explicitly defined. In this situation, we are telling the XQuery engine to return the first instance of the third node of the alpha node. Seems a bit clunky, but it works. Looking at the execution plan, we can see that this is a relatively complex process, with multiple calls between the two sides of the query processor:

The sql:column() function is similar, but is used to refer to a column instead of a parameter; this allows for the dynamic querying of an XML column on a row by row basis. For example:

DECLARE @T TABLE ( ID INT, x XML )

INSERT INTO @T

( ID, x )

VALUES ( 1, '<alpha>a</alpha><alpha>b</alpha><alpha>c</alpha>' ),

( 2, '<alpha>a</alpha><alpha>b</alpha><alpha>c</alpha>' ),

( 3, '<alpha>a</alpha><alpha>b</alpha><alpha>c</alpha>' )

SELECT ID, v=x.value('(//alpha)[sql:column("ID")][1]', 'varchar(1)')

FROM @T

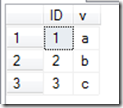

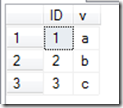

The above query will return a dataset like so:

Summary

SQL Server provides two functions for sharing information from the SQL engine to the XQuery engine: sql:variable() & sql:column(). The nature of these functions is pretty straight-forward; you pass the value of either a parameter or a column to an XML method, and it builds an XQuery string using the values of these functions.

SQL Server Hekaton, Microsoft’s new In-Memory table technology being shipped as part of SQL Server 2014, will completely change the way you think about data management. As a DBA, you’ll need to analyze your memory and storage needs completely differently. All Hekaton data is always stored in memory, and the data stored on disk is basically just a REDO log used to regenerate the contents of your memory-optimized tables. In this full-day seminar, Kalen Delaney (a SQL Server MVP for over 20 years) will show you the in-memory architecture for your Hekaton data and indexes, and discuss what gets written to disk during checkpoints, as well as what gets logged. She will explain how the recovery process recreates your Hekaton tables. Finally, she’ll go into detail on just what it is that makes Hekaton so much FASTER!

SQL Server Hekaton, Microsoft’s new In-Memory table technology being shipped as part of SQL Server 2014, will completely change the way you think about data management. As a DBA, you’ll need to analyze your memory and storage needs completely differently. All Hekaton data is always stored in memory, and the data stored on disk is basically just a REDO log used to regenerate the contents of your memory-optimized tables. In this full-day seminar, Kalen Delaney (a SQL Server MVP for over 20 years) will show you the in-memory architecture for your Hekaton data and indexes, and discuss what gets written to disk during checkpoints, as well as what gets logged. She will explain how the recovery process recreates your Hekaton tables. Finally, she’ll go into detail on just what it is that makes Hekaton so much FASTER!  In this session you will learn about SQL Server 2008 R2 and SQL Server 2012 performance tuning and optimization. Industry Expert Denny Cherry will guide you through tools and best practices for tuning queries and improving performance within Microsoft SQL Server. This session will guide you through real life performance problems which have been gathered and tuned using industry standard best practices and real world skills.

In this session you will learn about SQL Server 2008 R2 and SQL Server 2012 performance tuning and optimization. Industry Expert Denny Cherry will guide you through tools and best practices for tuning queries and improving performance within Microsoft SQL Server. This session will guide you through real life performance problems which have been gathered and tuned using industry standard best practices and real world skills.  The chances are that your organization has a centralized data repository, such as ODS or a data warehouse, but you might not use it to the fullest. Join this insightful full-day event to understand the importance of having a semantic layer that bridges users and data. In the Microsoft BI world, BISM consists of Power Pivot, Tabular, and Multidimensional.

The chances are that your organization has a centralized data repository, such as ODS or a data warehouse, but you might not use it to the fullest. Join this insightful full-day event to understand the importance of having a semantic layer that bridges users and data. In the Microsoft BI world, BISM consists of Power Pivot, Tabular, and Multidimensional.