In a previous post, I described how to use an Azure Logic App to update an Azure DevOps work item. To automate more of our processes, I’m moving more and more notifications and work items directly into Azure DevOps using Logic Apps. For example, for one of our websites, we have an SSRS report that queries the database to determine whether employees are compliant with password policies. We get a weekly email with the results, copy and paste them into Azure DevOps (to track the work), and then do the work. I want to eliminate the email step.

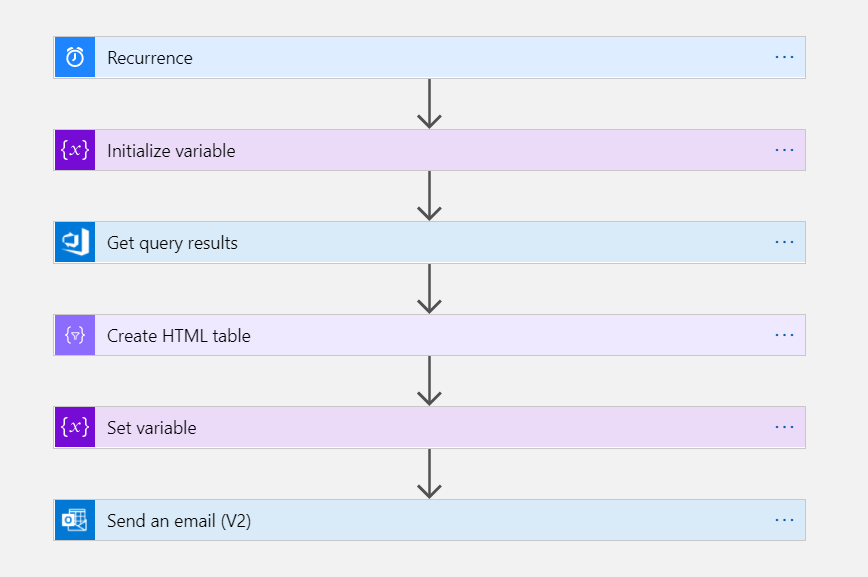

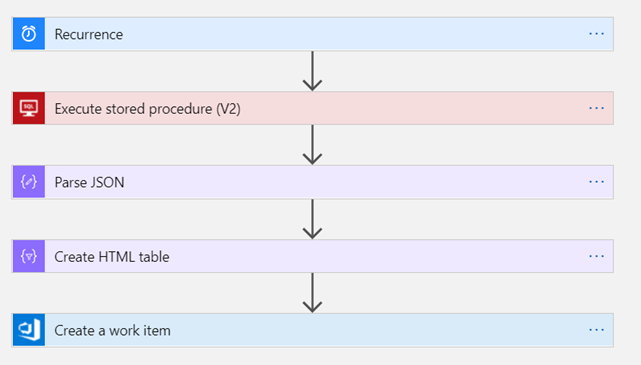

The workflow is very straightforward compared to updating an existing work item; there’s no need to call the REST API directly, because every connector it needs is built in.

- Set up a schedule using the Recurrence item.

- Execute a stored procedure. This is the step that can be difficult to set up, especially if you have an on-premises SQL Server. In our case, I had to download and install an on-premises data gateway, and then configure a connection to my SQL Server. Once that was done, I had to identify the database and the stored procedure that returns the result set I wanted to add to the work item.

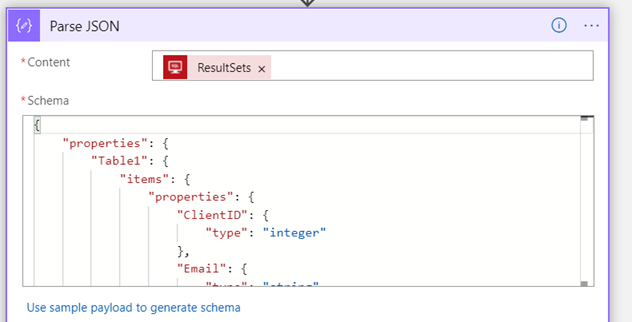

- The result set from the stored procedure is in JSON format; parsing the JSON allows it to be defined as individual elements that can be referenced by additional actions.

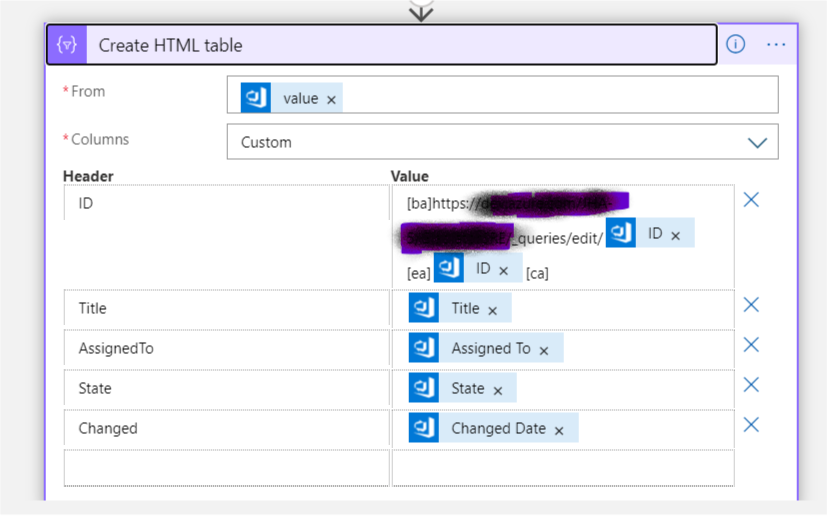

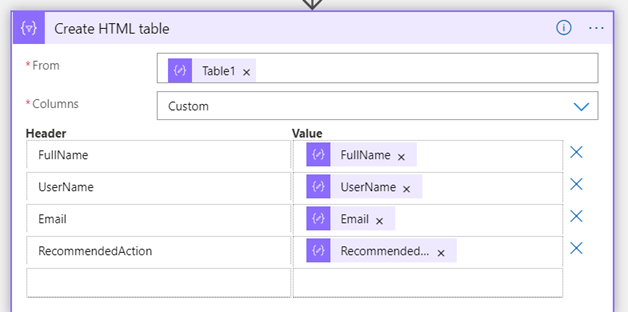

- I then take those elements and focus on the items of interest to construct an HTML table.

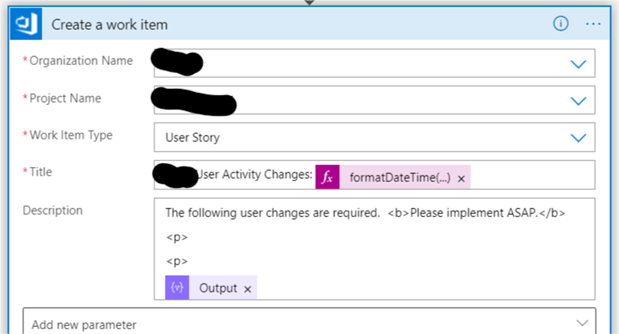

- I then create an Azure DevOps work item by adding the HTML table to the description field.
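To make the parse-and-build steps above more concrete, here is a minimal Python sketch of the same transformation outside of Logic Apps. It is only an illustration, not what the designer generates. The payload shape (`ResultSets` / `Table1`) is my assumption about what the stored procedure action returns, and the column names (`EmployeeName`, `DaysSinceChange`, `InternalId`) and the `build_html_table` helper are hypothetical.

```python
import json
from html import escape

# Hypothetical stored procedure output; the real shape and column names
# depend on your connector version and your stored procedure.
SAMPLE_BODY = """
{
  "ResultSets": {
    "Table1": [
      {"EmployeeName": "A. Example", "DaysSinceChange": 95, "InternalId": 1},
      {"EmployeeName": "B. Sample <temp>", "DaysSinceChange": 120, "InternalId": 2}
    ]
  }
}
"""


def build_html_table(rows, columns):
    """Render only the chosen columns of each row as an HTML table."""
    header = "".join(f"<th>{escape(col)}</th>" for col in columns)
    body = "".join(
        "<tr>"
        + "".join(f"<td>{escape(str(row.get(col, '')))}</td>" for col in columns)
        + "</tr>"
        for row in rows
    )
    return f"<table><thead><tr>{header}</tr></thead><tbody>{body}</tbody></table>"


# "Parse JSON": turn the raw text into individual elements.
rows = json.loads(SAMPLE_BODY)["ResultSets"]["Table1"]

# "Create HTML table": keep only the columns of interest.
description_html = build_html_table(rows, ["EmployeeName", "DaysSinceChange"])
print(description_html)
```

The resulting string plays the role of the value that goes into the work item's description field. Values are HTML-escaped in the sketch so that stray characters in the data cannot break the table markup.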

BONUS: I added a timestamp to the work item title by using the formatDateTime() function with the 'D' (long date) format:

`formatDateTime(utcNow(),'D')`
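For readers who want to see what that expression produces, here is a rough Python equivalent. It assumes the 'D' specifier yields an English long date such as "Monday, June 15, 2009"; the exact output in Logic Apps depends on the culture in use. The `long_date` and `work_item_title` helpers and the title prefix are hypothetical names for illustration.

```python
from datetime import datetime, timezone


def long_date(moment):
    """Approximate the 'D' (long date) format for an English culture."""
    # The day is inserted by hand so it is not zero-padded.
    return f"{moment.strftime('%A, %B')} {moment.day}, {moment.year}"


def work_item_title(prefix, moment=None):
    """Append the long date to a title prefix, defaulting to the current UTC time."""
    moment = moment or datetime.now(timezone.utc)
    return f"{prefix} - {long_date(moment)}"


# Hypothetical title prefix; use whatever your work items are called.
print(work_item_title("Password compliance report"))
```

Putting the date in the title makes each weekly work item easy to tell apart in a backlog without opening it.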