As I’ve blogged previously, my responsibilities at work have shifted to focus more on the application of Site Reliability Engineering principles to the delivery of our business services to our customers. Unofficially, we’re calling my team Service Reliability Engineering for a few reasons. I thought I’d take some time to explain what the differences are, and why I think the name matters. I realize I’m just one lonely guy in the wilderness, and I’m going up against Google, but I think one word in the title is wrong. Before I explain why, let me explain what I do like about the title.

As I’ve blogged previously, my responsibilities at work have shifted to focus more on the application of Site Reliability Engineering principles to the delivery of our business services to our customers. Unofficially, we’re calling my team Service Reliability Engineering for a few reasons. I thought I’d take some time to explain what the differences are, and why I think the name matters. I realize I’m just one lonely guy in the wilderness, and I’m going up against Google, but I think one word in the title is wrong. Before I explain why, let me explain what I do like about the title.

Engineering defines consistency of methods.

I realize that engineering is an interesting terms these days, with lots of different definitions; you can even be sued if you call yourself an engineer inappropriately in the wrong jurisdiction. However, the term itself is widely used in technology careers to describe the systematic design and operation of complex systems. Most modern applications are actually comprised of several smaller applications, all in varying states of underlying complexity. Furthermore, the delivery of an application to an end user (particularly web applications) can span the entire spectrum from infrastructure to platform to software. Additionally, applications can vary in terms of scalability, configuration, and location. Engineering addresses complexity, not just complication through systematic processes; engineers experiment, learn, and integrate consistent practices into their daily processes.

Reliability refers to purpose.

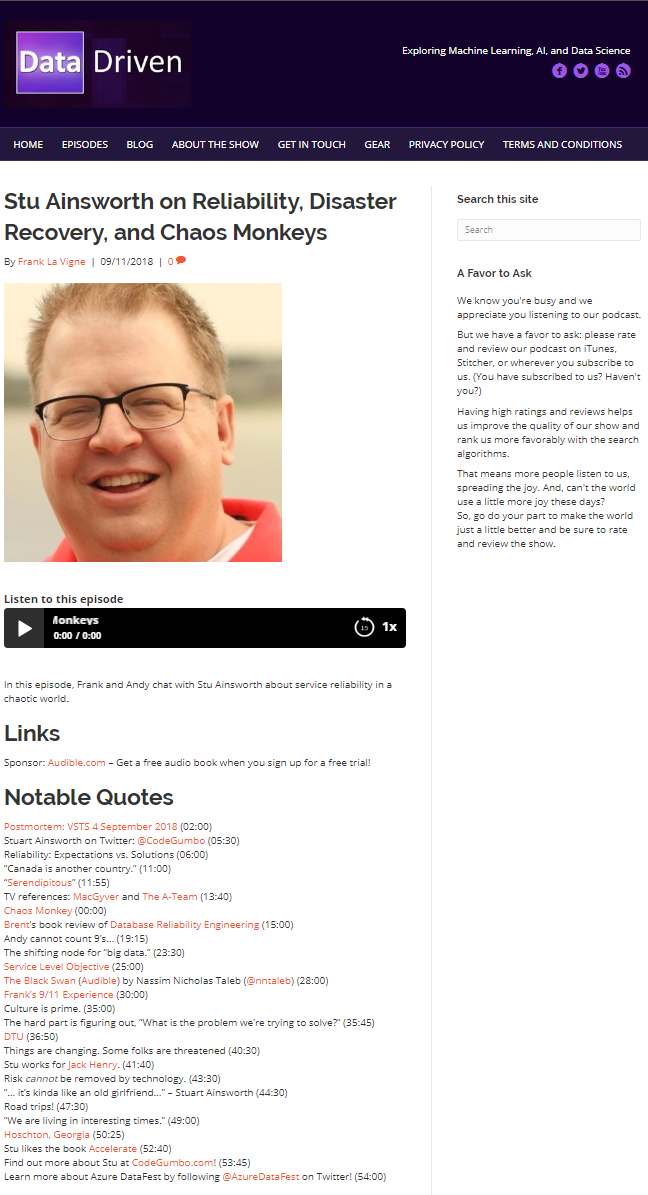

When your job title identifies reliability as a name, it means that you have a specific goal in mind, and that goal is not limited to a technology. Reliability engineers work with networking equipment, operating systems, applications, middleware, and/or database systems. They may specialize in a area (e.g., database reliability engineering is now a thing), but a robust team is comprised of necessary skill sets required to meet service level objectives across the entire technology stack. Reliability as a goal must first be defined, and then measured, and SRE responsibilities are responsible for measuring and addressing reliability across the entire spectrum, from infrastructure to platform to software. However, reliability measurement must also account for not only technological issues, but also the processes and people responsible for developing and operating the system. There’s a reason that a just culture is an integral part of the SRE experience (and the DevOps movement at large); people are responsible for how well technology performs, both in terms of defining expectations and day-to-day delivery of service. It only makes sense to look beyond technology when examining reliability, and that leads to where I disagree with the standard SRE nomenclature.

“Site” implies a technical focus; “Service” implies a business function.

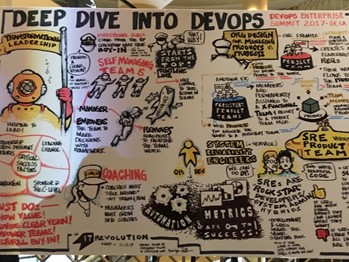

The word “Site” in the IT domain typically refers to either a physical location (data center site) or an application (web site); however, the heart of the definition is sociotechnical, not strictly technology. From an undated (seriously, Google?) interview with Ben Traynor, the founder of the SRE movement: “… we have a bunch of rules of engagement, and principles for how SRE teams interact with their environment — not only the production environment, but also the development teams, the testing teams, the users, and so on.” While the previous paragraph of that interview specifically focuses on the type of work that’s being done by Google’s SRE team, these rules of engagement show that SRE’s should be concerned with the entire value stream of service delivery including not only operations, but development, testing, and ultimately the end user experience. In, other words. SRE’s are concerned with the reliability of the whole service, not just the technical parts.

As I’ve

As I’ve

As many of you know, I’ve been slowly changing focus from database administration to DevOps, management, and service operations. Over the last few years, I’ve been heavily involved with migrating our datacenter to a private cloud infrastructure, and focusing on methods to improve the overall reliability and scalability of my company’s service deliverables. I’ve been a bit of an evangelist for organizational changes, and it’s finally official.

As many of you know, I’ve been slowly changing focus from database administration to DevOps, management, and service operations. Over the last few years, I’ve been heavily involved with migrating our datacenter to a private cloud infrastructure, and focusing on methods to improve the overall reliability and scalability of my company’s service deliverables. I’ve been a bit of an evangelist for organizational changes, and it’s finally official.