So, as usual, I’m having trouble sitting down to write; I know I should (it’s good for the soul), but frankly, I’m struggling to put words on a web page. To jump-start my brain, I thought I would write about something simple and relevant to what I’m doing these days in my primary capacity: management.

When I took over the reins of a newly formed department of DBAs, I knew that I needed to do something quickly to demonstrate our department’s value to our recently reorganized company. We’re a small division of the company, but we manage some relatively large databases (17 TB of data with a high change rate; approximately 10 TB of data changes daily). My team consisted of senior DBAs who were inundated with support requests (from “I need to know this information” to “I need help cleaning up this client’s data”); while they had monitoring in place, it wasn’t uncommon for things to go unnoticed (unplanned database growth, poorly performing queries, etc.). One of my first acts as a manager was to put in place a classification system I called MARS: all of our work as DBAs had to be categorized into one of four broad groups.

Maintenance, Architecture, Research, and Support

The premise is simple: by categorizing our efforts, we could measure where the department’s focus actually was and begin allocating resources to the proper areas. I defined the four areas of work as follows:

- Maintenance – the efforts needed to keep the system performing well; backups, security, proactive query tuning, and general monitoring are examples.

- Architecture – work associated with deploying new features, functionality, or hardware; data sizing estimates, upgrades to SQL Server 2012, and installation of Analysis Services are examples. To be honest, Infrastructure might have been a better term, but MIRS sounded stupid.

- Research – the efforts to understand and improve employee skills; I’m a former teacher, and I put a pretty high value on lifelong learning. I want my team to be recognized as experts, and the only way that can happen is if the expectation is there for them to learn.

- Support – the 800 lb gorilla in our shop; support efforts focus on incident management (to use ITIL terms) and problem resolution. Support is usually initiated by some other group; for example, Sales may request a contact list from our CRM that they can’t get through the interface, or we may be asked to explain why a ticket wasn’t generated for a customer.
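The bookkeeping behind this is simple enough to sketch. Below is a minimal Python example, assuming each work item is logged with a MARS category and the hours spent on it; the post doesn’t say how effort was actually recorded, so the `WorkItem` type, the hour-based measure, and the sample items are all hypothetical. It rolls the items up into each category’s share of total effort, which is the kind of number that shows where a department’s focus really sits.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class Mars(Enum):
    """The four MARS work categories."""
    MAINTENANCE = "Maintenance"
    ARCHITECTURE = "Architecture"
    RESEARCH = "Research"
    SUPPORT = "Support"


@dataclass
class WorkItem:
    # Hypothetical fields: a short description, its category, and hours spent.
    description: str
    category: Mars
    hours: float


def effort_share(items: list[WorkItem]) -> dict[Mars, float]:
    """Return each category's fraction of total logged hours."""
    totals: Counter = Counter()
    for item in items:
        totals[item.category] += item.hours
    grand_total = sum(totals.values())
    if grand_total == 0:
        # Nothing logged yet: report zero for every category.
        return {category: 0.0 for category in Mars}
    # Include every category, even ones with no logged work.
    return {category: totals[category] / grand_total for category in Mars}


if __name__ == "__main__":
    # Hypothetical sample items, one per category.
    items = [
        WorkItem("Nightly backup verification", Mars.MAINTENANCE, 2.0),
        WorkItem("Size storage for BI project", Mars.ARCHITECTURE, 3.0),
        WorkItem("Study clustered Analysis Services", Mars.RESEARCH, 1.0),
        WorkItem("Pull CRM contact list for Sales", Mars.SUPPORT, 4.0),
    ]
    for category, share in effort_share(items).items():
        print(f"{category.value:<12} {share:.0%}")
```

Reporting every category, including ones with no logged hours, keeps an empty bucket (say, no Research at all in a given month) visible instead of silently missing.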

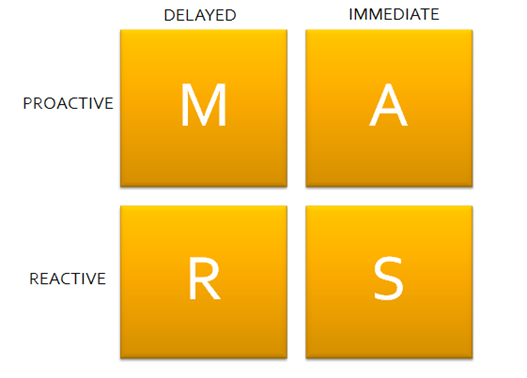

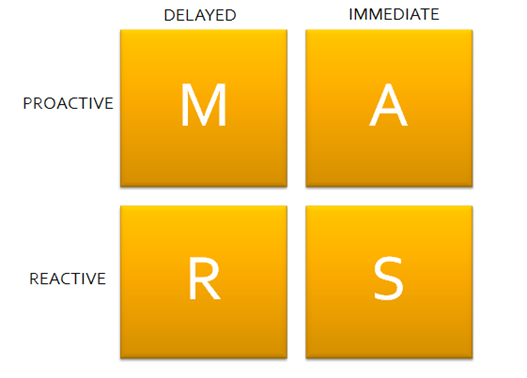

After about a year of data gathering, I went back and thought about the categories a bit more, and realized that I could associate some descriptive adjectives with the workload to show where the heart of our efforts lies. I took my cue from the Johari window and came up with two axes: Actions (Proactive vs. Reactive) and Results (Delayed vs. Immediate). I then arranged my four categories along those axes, like so:

In other words, Maintenance was Proactive but had Delayed results (you need to monitor your system for a while before you grasp the full impact of changes). Research was more Reactive, because we tend to research issues that are prompted by some stimulus (“what’s the best way to implement Analysis Services in a clustered environment?” came up as a Research topic because we have a pending BI project).

Immediate results came from Architectural changes; adding more spindles to our SAN improved our performance quickly, but there was Proactive planning involved before we made the change. Support is Reactive but has Immediate results; the expectation is that support issues get prioritized, so we try to resolve them quickly as part of our Operational Level Agreements with other departments.
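The quadrant amounts to a lookup from each category to its position on the two axes. The Python sketch below encodes the placements described in the post and sums effort onto the Proactive/Reactive axis. Research’s Delayed placement is an inference (it is the only quadrant left once the other three are placed), and the hour figures are hypothetical.

```python
from enum import Enum


class Action(Enum):
    PROACTIVE = "Proactive"
    REACTIVE = "Reactive"


class Result(Enum):
    DELAYED = "Delayed"
    IMMEDIATE = "Immediate"


# Quadrant placement of each MARS category, keyed by category name.
# Research -> DELAYED is inferred: it is the only quadrant left over.
QUADRANT: dict[str, tuple[Action, Result]] = {
    "Maintenance": (Action.PROACTIVE, Result.DELAYED),
    "Architecture": (Action.PROACTIVE, Result.IMMEDIATE),
    "Research": (Action.REACTIVE, Result.DELAYED),
    "Support": (Action.REACTIVE, Result.IMMEDIATE),
}


def hours_by_action(hours: dict[str, float]) -> dict[Action, float]:
    """Sum hours per category onto the Proactive/Reactive axis."""
    totals = {action: 0.0 for action in Action}
    for category, spent in hours.items():
        action, _result = QUADRANT[category]
        totals[action] += spent
    return totals


if __name__ == "__main__":
    # Hypothetical hours for one reporting period.
    sample = {"Maintenance": 10, "Architecture": 5, "Research": 5, "Support": 30}
    for action, total in hours_by_action(sample).items():
        print(action.value, total)
```

Swapping `Action` for `Result` in the lookup gives the Delayed/Immediate split the same way, so either axis can be tracked from one set of categorized hours.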

After a couple of months of looking at our workload (using Kanban), I see that we still spend a lot of time in Support, but that effort is trending downward; I continue to push Maintenance, Architecture, and Research over Support, and we’re becoming much more proactive in our approach. I’m not sure this quadrant approach is the best way to represent workload, but it does give me a general rule of thumb for guiding our efforts.

SQL Server Hekaton, Microsoft’s new In-Memory table technology being shipped as part of SQL Server 2014, will completely change the way you think about data management. As a DBA, you’ll need to analyze your memory and storage needs completely differently. All Hekaton data is always stored in memory, and the data stored on disk is basically just a REDO log used to regenerate the contents of your memory-optimized tables. In this full-day seminar, Kalen Delaney (a SQL Server MVP for over 20 years) will show you the in-memory architecture for your Hekaton data and indexes, and discuss what gets written to disk during checkpoints, as well as what gets logged. She will explain how the recovery process recreates your Hekaton tables. Finally, she’ll go into detail on just what it is that makes Hekaton so much FASTER!

In this session you will learn about SQL Server 2008 R2 and SQL Server 2012 performance tuning and optimization. Industry Expert Denny Cherry will guide you through tools and best practices for tuning queries and improving performance within Microsoft SQL Server. This session will guide you through real life performance problems which have been gathered and tuned using industry standard best practices and real world skills.

The chances are that your organization has a centralized data repository, such as ODS or a data warehouse, but you might not use it to the fullest. Join this insightful full-day event to understand the importance of having a semantic layer that bridges users and data. In the Microsoft BI world, BISM consists of Power Pivot, Tabular, and Multidimensional.