I recently helped a friend solve an XML problem, and thought I would post the solution here. Although there are lots of notes on how to use XQuery in SQL Server 2005+, this was a real-world scenario that turned out to be trickier than I expected. My friend works for an insurance company broker, and in one of their applications, accident questionnaires (and their answers) are stored as XML. This lets them treat all questionnaires the same way, regardless of origin, as long as the QuestionCodes are common across vendors.

Below is the sample data that he was asking me about; he needed to get one question and answer per row into a data set:

    DECLARE @T TABLE ( RowID INT, Fragment XML )

    INSERT INTO @T ( RowID, Fragment )
    VALUES ( 1, '<Questionnaire xmlns="http://www.xxx.com/schemas/xxxXXXXXxxx.0">
      <Question>
        <QuestionCode>74</QuestionCode>
        <Question>Why did you wreck your car?</Question>
        <Answer>I was drunk</Answer>
        <Explanation />
      </Question>
      <Question>
        <QuestionCode>75</QuestionCode>
        <Question>Why is the rum all gone?</Question>
        <Answer>Because I drank it.</Answer>
        <Explanation />
      </Question>
    </Questionnaire>' )
    , ( 2, '<Questionnaire xmlns="http://www.xxx.com/schemas/xxxXXXXXxxx.0">
      <Question>
        <QuestionCode>74</QuestionCode>
        <Question>Why did you wreck your car?</Question>
        <Answer>Stuart was drunk</Answer>
        <Explanation />
      </Question>
      <Question>
        <QuestionCode>75</QuestionCode>
        <Question>Why is the rum all gone?</Question>
        <Answer>Because I made mojitos.</Answer>
        <Explanation />
      </Question>
    </Questionnaire>' )

I thought it would be a simple query: use the .nodes() method to shred each question and its corresponding answer into its own row. But for some reason, when I ran the following, I got interesting results:

    SELECT t.RowID
         , QuestionCode = t1.frag.value('(QuestionCode)[1]', 'int')
         , Question = t1.frag.value('(Question)[1]', 'varchar(max)')
         , Answer = t1.frag.value('(Answer)[1]', 'varchar(max)')
         , Explanation = t1.frag.value('(Explanation)[1]', 'varchar(max)')
    FROM @T t
    CROSS APPLY t.Fragment.nodes('//Questionnaire/Question') AS t1 ( frag )

RowID QuestionCode Question Answer Explanation

That’s right, nothing. Strange, considering I’ve done variations of this query for a couple of years now to parse out firewall data fragments. I looked closer to see how this XML differed from the fragments I usually work with, and it was clear: a namespace reference. Most of the data I deal with is not true XML, but rather fragments I convert to XML in order to facilitate easy transformations. An unprefixed name in an XQuery path matches only elements that are in no namespace, so Questionnaire never matches elements that sit in the document's default namespace. To test, I stripped the namespace declaration (xmlns="http://www.xxx.com/schemas/xxxXXXXXxxx.0" ) out, and voilà! Results!

| RowID | QuestionCode | Question | Answer | Explanation |
|---|---|---|---|---|
| 1 | 74 | Why did you wreck your car? | I was drunk | |
| 1 | 75 | Why is the rum all gone? | Because I drank it. | |
| 2 | 74 | Why did you wreck your car? | Stuart was drunk | |
| 2 | 75 | Why is the rum all gone? | Because I made mojitos. | |

Well, that was great, because it showed me where the problem was, but how do I fix it? I stumbled upon a solution, but to be honest, I’m not sure it’s the best one. If I modify my query to match elements in any namespace (the old wildcard: *) like so:

    -- The *: prefix matches the element name in any namespace
    SELECT t.RowID
         , QuestionCode = t1.frag.value('(*:QuestionCode)[1]', 'int')
         , Question = t1.frag.value('(*:Question)[1]', 'varchar(max)')
         , Answer = t1.frag.value('(*:Answer)[1]', 'varchar(max)')
         , Explanation = t1.frag.value('(*:Explanation)[1]', 'varchar(max)')
    FROM @T t
    CROSS APPLY t.Fragment.nodes('//*:Questionnaire/*:Question') AS t1 ( frag )

I get the correct results.

| RowID | QuestionCode | Question | Answer | Explanation |
|---|---|---|---|---|
| 1 | 74 | Why did you wreck your car? | I was drunk | |
| 1 | 75 | Why is the rum all gone? | Because I drank it. | |
| 2 | 74 | Why did you wreck your car? | Stuart was drunk | |
| 2 | 75 | Why is the rum all gone? | Because I made mojitos. | |

Here’s the question for any XML guru who stumbles along this way: is there a better way to do this?
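One candidate answer, offered as a sketch rather than a definitive fix: SQL Server's WITH XMLNAMESPACES clause lets a statement declare the namespace once, so the original unprefixed paths resolve against it. The trimmed single-row copy of the sample data and the `/Questionnaire/Question` path (rooted at the document element instead of `//`) are my own assumptions for illustration.

```sql
-- Sketch only: a trimmed copy of the sample data so the batch runs on its own.
DECLARE @T TABLE ( RowID INT, Fragment XML );

INSERT INTO @T ( RowID, Fragment )
VALUES ( 1, '<Questionnaire xmlns="http://www.xxx.com/schemas/xxxXXXXXxxx.0">
  <Question>
    <QuestionCode>74</QuestionCode>
    <Question>Why did you wreck your car?</Question>
    <Answer>I was drunk</Answer>
    <Explanation />
  </Question>
</Questionnaire>' );

-- Declare the document's namespace as the default for every XQuery expression
-- in the statement that follows, so unprefixed element names resolve to it.
-- The preceding statement must end with a semicolon for WITH to parse.
WITH XMLNAMESPACES ( DEFAULT 'http://www.xxx.com/schemas/xxxXXXXXxxx.0' )
SELECT t.RowID
     , QuestionCode = t1.frag.value('(QuestionCode)[1]', 'int')
     , Question = t1.frag.value('(Question)[1]', 'varchar(max)')
     , Answer = t1.frag.value('(Answer)[1]', 'varchar(max)')
     , Explanation = t1.frag.value('(Explanation)[1]', 'varchar(max)')
FROM @T t
CROSS APPLY t.Fragment.nodes('/Questionnaire/Question') AS t1 ( frag );
```

The trade-off is that this version matches only elements in that one namespace URI. If different vendors send questionnaires under different namespaces, the wildcard query may still be the more practical choice.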

![hal[1] hal[1]](http://codegumbo.com//images/TSQL2sDay003Maslowandrelationaldesign_B47C/hal1_thumb.jpg)

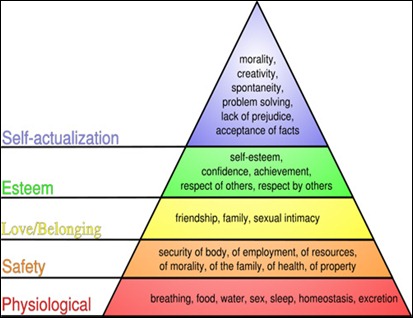

I’ve been reading Aaron Bertrand’s great series of blog posts on bad habits to kick, and have been thinking to myself: what are some good habits that SQL Server developers should implement? I spend most of my day griping about bad design from vendors, yet I hardly ever take the time to document what should be done instead. This post is my first attempt to do so, and it’s based on the following assumptions:


No, what bugs me, is that out of the 6 answers posted, 3 of them involve CURSORS. For a SQL question. I know. Shock and horror.
