This month’s T-SQL Tuesday is being hosted by Aaron Nelson. Since I’m posting this late in the day, I got a chance to sneak a peek at some of the other entries, and most of them were great technical discussions of how to log, what to log, and why you should log. I’m not feeling technical today; today’s a conceptual day. So, rather than write about the pros and cons of logging, I thought I would ask you to step back and consider: who is your audience?

At my company, we monitor logs for our customers. I’ve had to reverse-engineer a bunch of different log formats in my day, and I’ve found some basic principles behind good logging practices; here’s my stab at them:

1. Logging without analysis is useless storage of information.

I realize that our jobs as data professionals have gotten more exciting because of policy requirements that insist upon the storage of all kinds of information for exceedingly long periods of time. I recently read a requirement that said a company must maintain source code for 20 years; that’s a heckuva long time to keep a log of changes around. But if no one ever looks at the log, why store it? If you’re going to be storing information, you need some process that consumes that information in order to get the most value out of it. Good logging processes assume that someone will review the log at some point and use that information to act.

2. Logging structures should be flexible.

If you are logging information with the mindset that someone will be reviewing the log in the future, then you need to balance precision (i.e., gathering adequate information to describe the logged event) with saturation (don’t over-log; not every event is important). For example, if you’re building an audit log to track changes to a customer account, you want to be able to isolate “risky” behavior from normal account maintenance. If your logs become lengthy result sets filled with change statements, it’s easy to overlook important events such as a bad command.

Most logging structures attempt to overcome this by attaching some sort of categorical typing to each log entry; in other words, if we think in tabular terms, the columns of a log dataset might look like:

- DateOfEvent – datetime

- Category – Classification of the event (Error, Information, etc.)

- Severity – Some indication of how important the event is

- ErrorCode – Some short (usually numeric) code that has meaning extrinsic to the system

- Message – The payload; a string description of what happened.

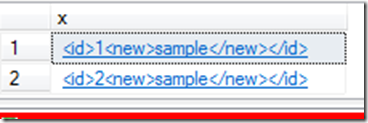
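To make the tabular layout above concrete, here is a minimal Python sketch (Python is used only for illustration; in SQL Server this would simply be a table). The class and field names mirror the column list; treating Severity as an integer where a higher number means more important is an assumption, and the sample rows are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class LogEntry:
    """One row of the tabular log (field names are illustrative)."""
    date_of_event: datetime
    category: str      # e.g. "Error", "Information"
    severity: int      # assumption: higher number = more important
    error_code: int    # short code whose meaning lives outside the system
    message: str       # free-text payload


def filter_entries(entries, category=None, min_severity=0):
    """Return entries matching an optional category and a minimum severity."""
    return [
        e for e in entries
        if (category is None or e.category == category)
        and e.severity >= min_severity
    ]


# Hypothetical sample rows
log = [
    LogEntry(datetime(2024, 1, 1, 9, 0), "Information", 1, 0, "Service started"),
    LogEntry(datetime(2024, 1, 1, 9, 5), "Error", 3, 5, "Access Denied"),
    LogEntry(datetime(2024, 1, 1, 9, 7), "Warning", 2, 17, "Password expires soon"),
]

# Isolate the errors from the informational noise
for entry in filter_entries(log, category="Error"):
    print(entry.date_of_event, entry.error_code, entry.message)
```

Filtering on the categorical columns is cheap and exact; the free-text Message column is where searching gets harder.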

It becomes relatively easy to isolate error messages from informational messages; however, how do you search non-categorical information within the message itself? For example, if you want to determine whether a specific error code was associated with a specific logon, and that logon information is embedded in the message of your log, how do you search for it? The easy answer is to use wildcards, but is there a better way? In my experience, good logs use some form of intra-message tagging to isolate key elements within the message; the log remains simple for quick searches, but can easily be adapted for more in-depth searches. I like XML attributes for payload logging; they’re easy to implement and can be parsed quickly. For example:

`acct="Stuart Ainsworth" msg="Access Denied" object="SuperSecretPage.aspx"`

is an easy message to shred, so I can look for all denied attempts on SuperSecretPage.aspx. If I wanted to look for all activity by Stuart Ainsworth, I could do that as well.
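One way to shred an attribute-style payload is to wrap it in a dummy element and hand it to an XML parser. The Python sketch below does that with the standard library’s `xml.etree.ElementTree`; the wrapper element name `entry`, the function name `shred_payload`, and the extra sample messages (including the account "Jane Doe") are hypothetical.

```python
import xml.etree.ElementTree as ET


def shred_payload(payload: str) -> dict:
    """Turn an attribute-style message into a dict.

    Assumes the payload is a sequence of well-formed XML attributes with
    straight quotes and XML-escaped values, e.g. acct="..." msg="...".
    """
    # Wrap in a hypothetical <entry /> element so the parser accepts it
    element = ET.fromstring(f"<entry {payload} />")
    return dict(element.attrib)


messages = [
    'acct="Stuart Ainsworth" msg="Access Denied" object="SuperSecretPage.aspx"',
    'acct="Stuart Ainsworth" msg="Login OK" object="Default.aspx"',
    'acct="Jane Doe" msg="Access Denied" object="SuperSecretPage.aspx"',
]

shredded = [shred_payload(m) for m in messages]

# All denied attempts on SuperSecretPage.aspx
denied = [s for s in shredded
          if s["msg"] == "Access Denied" and s["object"] == "SuperSecretPage.aspx"]

# All activity by one account
by_stuart = [s for s in shredded if s["acct"] == "Stuart Ainsworth"]

print(len(denied), len(by_stuart))
```

This only works if every value is XML-escaped (`&`, `<`, quotes) when the entry is written and no attribute name repeats within a message; otherwise the parse fails. In SQL Server, the same wrap-then-parse idea can be applied by casting the wrapped string to the `xml` type and reading attributes with its `.value()` method.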

3. Logging should have a maintenance plan.

If you log a lot, you know that logs fill up quickly. How long do you retain your logs (and the answer shouldn’t be “until the drive fills up”)? Too much information that is easily accessible is both a security risk and a distraction; if you’re trying to find out about a recent transaction by a user, do you really need to know that they’ve been active for the last 10 years? Also, if your log file does fill up, is your only option to “nuke it” in order to keep it running and collecting new information?

A good logging strategy will have some sort of retention plan, and a method of maintaining older log records separately from new ones. Look at SQL Server error logs, for example; every time the service starts, a new log file is created. Is that the best strategy? I don’t think so, but it does isolate older logs from newer ones. If you’re designing a logging method, be sure to figure out a way to keep old records separate from new ones; periodically archive your logs, and find a way to focus your resources on the most recent and relevant information.
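A retention plan can start as simply as a cutoff date. The Python sketch below assumes log entries are `(timestamp, message)` pairs and uses a hypothetical 30-day window: it splits recent records from older ones, then groups the older ones by month so each month can be archived on its own, loosely echoing how SQL Server starts a fresh error log rather than growing one file forever. The window, the monthly grouping, and the function names are illustrative choices, not a prescription.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def split_by_age(entries, now, keep_days=30):
    """Partition (timestamp, message) pairs into recent and archivable lists.

    keep_days is a hypothetical retention window; choose one that fits your policy.
    """
    cutoff = now - timedelta(days=keep_days)
    recent = [e for e in entries if e[0] >= cutoff]
    older = [e for e in entries if e[0] < cutoff]
    return recent, older


def bucket_by_month(entries):
    """Group entries by year-month so each bucket can be archived separately."""
    buckets = defaultdict(list)
    for timestamp, message in entries:
        buckets[timestamp.strftime("%Y-%m")].append((timestamp, message))
    return dict(buckets)


# Hypothetical sample data
now = datetime(2024, 3, 1)
entries = [
    (datetime(2023, 12, 15), "Service started"),
    (datetime(2024, 1, 5), "Access Denied"),
    (datetime(2024, 2, 10), "Access Denied"),
    (datetime(2024, 2, 28), "Password changed"),
]

recent, older = split_by_age(entries, now)
archive = bucket_by_month(older)
print(len(recent), sorted(archive))
```

Keeping only the recent slice in the "hot" log keeps day-to-day searches focused, while the monthly buckets can be moved to cheaper storage or purged once they age past whatever retention requirement applies.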