So a few weeks ago, I mentioned that I was starting to diversify my data interests in hopes of steering my career path a bit. Since then, I’ve built a home-brewed server and downloaded a copy of the Hortonworks Sandbox for Hadoop. I’ve started working through a few tutorials, and thought I would share my experiences so far.

My setup….

I don’t have a lot of free cash to set up a super-duper learning environment, but I wanted to do something on-premises. I know that Microsoft has HDInsight, its cloud-based Hadoop service built on the Hortonworks Data Platform, but I’m trying to understand the administrative side of Hadoop as well as the general interface. I opted to upgrade my old fileserver to a newer rig; costs ran about $600 for the following:

- ASUS M5A97 R2.0 970 AM3+ motherboard

- AMD FX-8350 8-core 4.0 GHz CPU (8 MB cache)

- G.SKILL F3-1600C9Q-32GSR memory (4 x 8 GB)

- Samsung SH-224DB/BEBE DVD burner

I already had a case, a power supply, and a couple of SATA drives (sadly, my IDE drives no longer work, which is also why I bought a DVD burner). I also had a licensed copy of 64-bit Windows 7, as well as a few development copies of Microsoft applications from a few years ago (oh, how I wish I was an MVP…).

As a sidebar, I will NEVER purchase computer equipment from Newegg again; their customer service was horrible. A few pins were bent on the CPU, and it took nearly 30 days to get a replacement, and most of that time was spent with little or no notification.

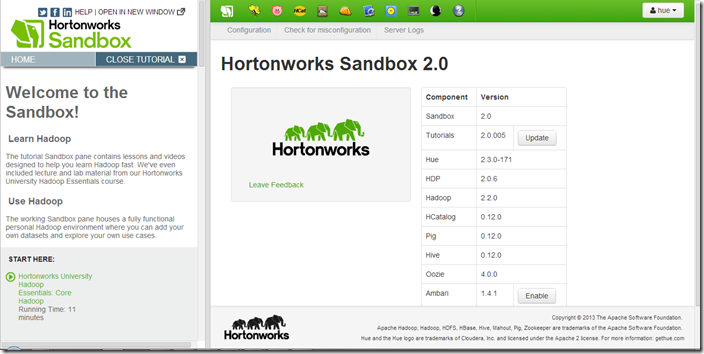

I downloaded and installed the VirtualBox version of the Hortonworks Sandbox. Of course, I had to reinstall after a few tutorials because I had skipped a few steps; after going back and following the instructions, everything is just peachy. One of the nice benefits of the VirtualBox setup is that once I fire up the Hortonworks VM on my server, I can point a web browser on my laptop at the server’s IP address with the appropriate port added (e.g., xxx.xxx.xxx.xxx:8888), and bam, I’m up and running.
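If the browser doesn’t connect, it can help to check from the laptop whether the port is reachable at all. Here is a minimal Python sketch of that check; the function names `sandbox_url` and `port_is_open` are my own for illustration, and the only detail taken from my setup is port 8888.

```python
import socket


def sandbox_url(host: str, port: int = 8888) -> str:
    """Build the address to type into the browser for the Sandbox UI."""
    return f"http://{host}:{port}"


def port_is_open(host: str, port: int = 8888, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        # create_connection raises OSError (refused, unreachable, timed out) on failure
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

Calling `port_is_open("<server IP>")` with the server’s address only tells you that something is listening on that port, not that the Sandbox itself is healthy, but it is a quick way to separate network problems from VM problems.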

Working my way through a few tutorials

First, I have to say, I really like the way the Sandbox is organized; it’s basically two frames: the tutorials on the left, and the actual interface into a working version of Hadoop on the right. It makes it very easy to go through the steps of the tutorial.

The Sandbox has lots of links and video clips to help augment the experience, but it’s pretty easy to get up and running on Hadoop. After only a half-hour or so of clicking through the first couple of tutorials, I had some of the basics down for understanding what Hadoop is (and is not). Below is a summary of my initial thoughts (WARNING: these may change as I learn more).

Summary:

- Hadoop is made up of several different data access components, each with its own history. Unlike a single tool such as SQL Server Management Studio, the experience may vary depending on which tool you are using at a given time. The tools include (but are not limited to):

  - Beeswax (Hive UI): Hive is a SQL-like language, so this UI is probably the most familiar to those of us with RDBMS experience. It’s a query editor.

  - Pig: a procedural language that abstracts the data manipulation away from MapReduce (the underlying engine of Hadoop). Pig and Hive have some overlapping capabilities, but there are differences (many of which I’m still learning).

  - HCatalog: a relational abstraction of data across HDFS (Hadoop Distributed File System); think of it like the DDL of SQL. It defines databases and tables from the files where your actual data is stored; Hive and Pig are like DML, interacting with the defined tables.

- A single-node Hadoop cluster isn’t particularly interesting; the fun part will come later when I set up additional nodes.
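
To make the DDL/DML analogy from the HCatalog bullet a bit more concrete, here is a small Python sketch. It uses SQLite as a stand-in, not Hadoop: the table name `page_views`, the sample rows, and the helper names are all made up for illustration. The one big difference is that SQLite stores the rows itself, whereas HCatalog only describes files that already sit in HDFS.

```python
import sqlite3


def build_demo() -> sqlite3.Connection:
    """Create an in-memory database with one table and a few sample rows."""
    conn = sqlite3.connect(":memory:")
    # DDL: define the table structure (the role I'm assigning to HCatalog).
    conn.execute("CREATE TABLE page_views (user_id TEXT, url TEXT, hits INTEGER)")
    # In Hadoop the data would already exist as files in HDFS; SQLite has no
    # equivalent, so the made-up rows are simply inserted here.
    rows = [("alice", "/home", 3), ("bob", "/home", 1), ("alice", "/about", 2)]
    conn.executemany("INSERT INTO page_views VALUES (?, ?, ?)", rows)
    return conn


def hits_per_user(conn: sqlite3.Connection) -> list:
    """Query the defined table (the role I'm assigning to Hive or Pig)."""
    cur = conn.execute(
        "SELECT user_id, SUM(hits) FROM page_views "
        "GROUP BY user_id ORDER BY user_id"
    )
    return cur.fetchall()
```

The point is only the split of responsibilities: one step defines what a table looks like, and a separate step asks questions of it.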